AI Fraud: Our Tips to Help You Protect Yourself from Digital Trickery

Artificial Intelligence is evolving and so are scams. Midcoast FCU is committed to providing you with the tools and education needed to help you safeguard yourself against financial exploitation. In this article, read our tips on how to recognize and report AI Impersonation scams.

AI Impersonation Scams Are Booming

In today’s tech-driven world, we interact with each other numerous times each day through a variety of channels. Think about it: today you might talk to a friend on the phone, click on a North Face ad in your Facebook feed, meet with your boss on a video call, or speak with a customer service representative about your new dishwasher. These frequent interactions with family, friends, coworkers, and companies mean more touchpoints, and every touchpoint is another opening a scammer can exploit. “What kind of opening?” you ask. Introducing AI impersonation scams.

AI impersonation scams use advanced artificial intelligence to clone voices, mimic faces, and impersonate real people. A scammer can make a voice on a phone call sound identical to someone you trust, even though it isn’t them. Scammers can also manipulate videos to make a person appear to say and do anything they want. You might think Tom Cruise is personally encouraging you to buy that leather jacket, when it’s really the scammer. The possibilities for AI impersonation are nearly endless. It’s important to stay educated about AI impersonation tactics, and equally important to share that knowledge with those around us.

Example 1:

Type of Scam: Family/Friends Scam – Phone

Family and friends are often the people we trust most, and we would do anything to protect them. Scammers are now exploiting that trust by using AI and a short 30-second clip of a loved one’s voice to trick victims into sending them money. They impersonate children, grandchildren, great-grandchildren, friends, and the list goes on. In one recent case in Birmingham, Alabama, an elderly couple received a call that sounded just like their grandson. The caller claimed he had been in a car accident and was going to jail. He gave them a case number and the name of an assigned attorney, which added credibility to the scam. The caller said he was in pain and promised to pay them back if they helped. Another call then came from the “attorney,” demanding over $11,000 in bail money. The couple later found out that the scammer had used AI to clone their grandson’s voice from his TikTok videos.

Common Patterns: Family/Friend Impersonations

- Calls are always urgent. The person sounds emotional or rushed, but the background noise feels fabricated or flat. They may even say, “Don’t call anyone else.”

- The call usually involves a scenario where the person needs immediate help, such as an accident, unexpected medical expenses, or bail.

- They refuse to switch to video chat or meet you in person (because they can’t).

* If you receive an unsolicited call, text, or email claiming to be from Midcoast FCU asking for ANY of your personal or account information, HANG UP and/or DELETE THE MESSAGE. Contact us at 877.964.3262 to report or verify the call, or if you feel your information has been compromised. We can assist you with next steps.

Common Patterns: Financial Institution Impersonations

- They walk you through “security steps.”

- They claim to be from “financial institution support” or a “tech support department” and reference supposed “suspicious activity.”

- They urge immediate action and offer to “help” secure the account.

Example 2:

Type of Scam: Financial Institution – Phone, text, email spoofing and cloned voices

In this increasingly common scam, fraudsters use AI to impersonate credit union (or bank) employees and steal money through phone, text, and email interactions. According to experts, they “clone voices, generate convincing scripts, and respond dynamically to victims’ questions in a way that feels like a real customer service interaction.” Typically, the scam starts with a text, phone call, or email from someone claiming to be from the support team at the victim’s bank or credit union. The scammer says the account is at risk and needs immediate attention. The “support agent” then guides the victim through alleged “security steps” that eventually give the scammer control of the victim’s accounts. These fraudulent security steps include:

- Requests for personal information. Legitimate organizations will NEVER ask for sensitive information, like passwords, Social Security numbers, or credit card details, by email, text, or phone call.

- Requests for account information. Legitimate organizations already know your full account number and don’t need your PIN or the CVV on the back of your credit card to assist you.

- Requests to download an alternate “Security App.” Scammers use AI to create fake mobile apps that mimic financial institution interfaces, tricking members into giving the scammers direct access to the member’s computer or phone.

Example 3:

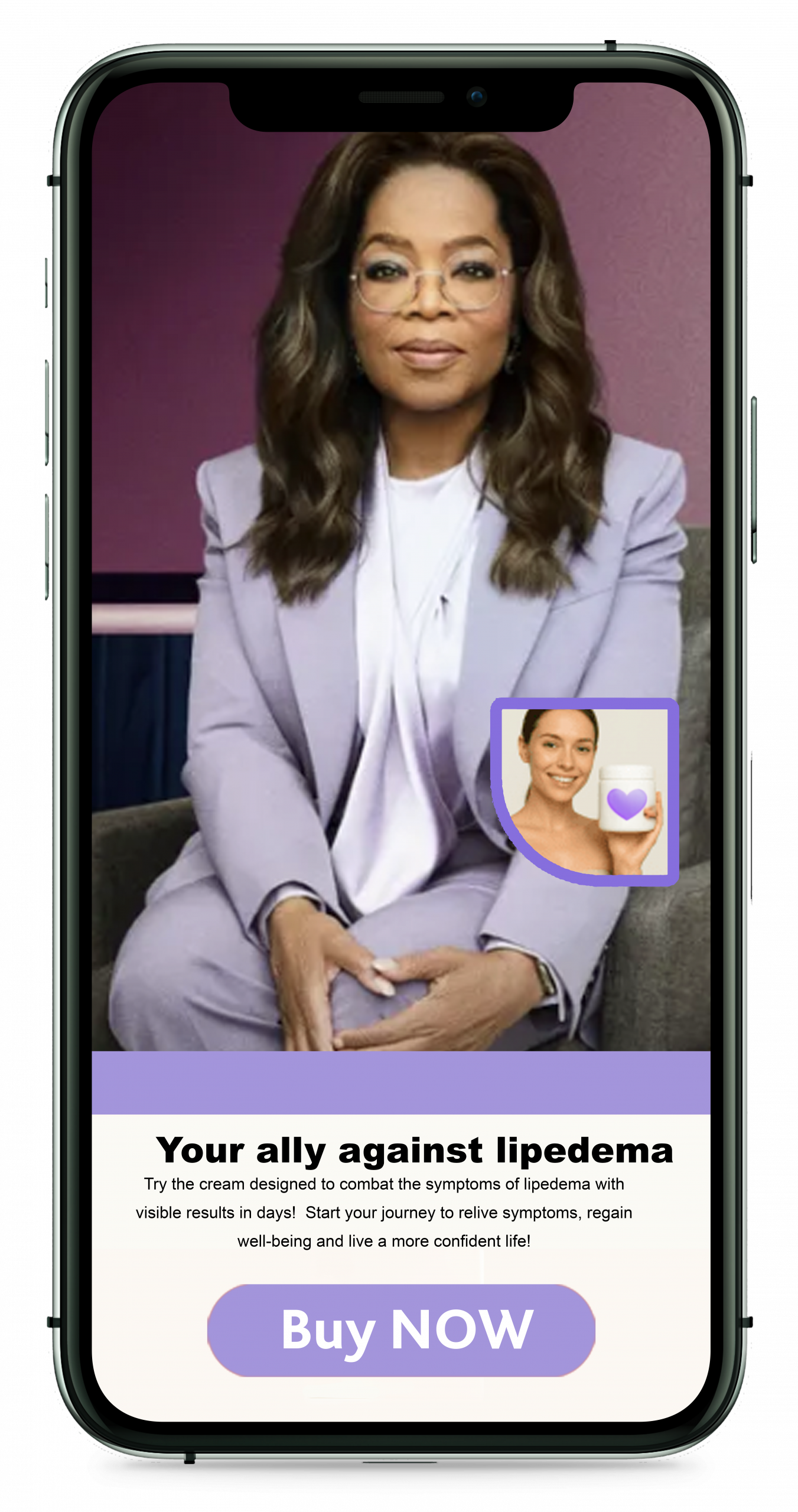

Type of Scam: Facebook Ad with Deepfake Doctor and Celebrity Endorsements

Social media reels may not be real! Fraudsters are known to use AI deepfake technology to create videos of doctors and celebrities endorsing everything from diet pills to medical treatments. In a recent Facebook ad scam highlighted by the Today show, a woman who had long suffered from lipedema was persuaded to purchase a “miracle cream” costing over $600. The ad featured testimonials from well-known celebrities (Oprah Winfrey, Kelly Clarkson, and Carnie Wilson) and, even more convincingly, from the woman’s own doctor, board-certified lipedema surgeon Dr. David Amron. She later found out that the footage of Amron and the celebrities had been pirated from the web and digitally manipulated to lure in hopeful patients. The testimonials were all “deepfakes.” She lost more than $600 and received a mystery cream that did not fix her lipedema.

Common Patterns: Ad Impersonations

- Scam ads often sound emotionless, using phrases that feel repetitive or generic.

- URLs are incorrect or contain errors and don’t match the brand’s official website URL.

- Influencer and celebrity endorsements often appear unnatural or out of character.
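For readers who are comfortable with a little code, the URL check in the list above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `matches_official_domain` is a hypothetical helper name, and `example.com` stands in for whatever official website address you have confirmed yourself. A matching domain does not prove an ad is legitimate; a mismatch is simply a strong reason to stop.

```python
from urllib.parse import urlsplit


def matches_official_domain(url: str, official_domain: str) -> bool:
    """Return True if the link's host is the official domain or a subdomain of it.

    `official_domain` is an assumption supplied by the reader (for example,
    the address printed on a statement), not something looked up by this code.
    """
    # urlsplit only finds a hostname when the link includes a scheme
    # such as "https://"; otherwise hostname is None and we return False.
    host = (urlsplit(url).hostname or "").lower().rstrip(".")
    official = official_domain.lower().rstrip(".")
    return host == official or host.endswith("." + official)


# Placeholder links for illustration only.
print(matches_official_domain("https://www.example.com/sale", "example.com"))
print(matches_official_domain("https://example.com.deals-shop.net/sale", "example.com"))
print(matches_official_domain("https://examp1e.com/", "example.com"))
```

The second link shows a common trick: the official name appears at the start of the address, but the real domain is whatever comes last before the first slash. The third swaps a letter for a look-alike digit.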

Tips to Protect Yourself from AI Impersonation Scams:

- Let unfamiliar or suspicious numbers ring to voicemail. This step alone can cut scams short almost immediately.

- Simply slow down. A scammer’s success depends on creating a sense of urgency and manipulating your emotions. Before you act, stop. No legitimate request should pressure you to take instant action.

- Secure your accounts. Use tools such as multi-factor authentication (MFA) to help protect your accounts and be sure to enable real-time alerts for suspicious activity.

- If it’s an ad or a message, check the authenticity of the content before engaging with it. Look for signs of manipulation like unrealistic promises or strange phrasing. Don’t be afraid to look at the reviews and comments; they can be lifesavers.

- Watch for subtle red flags. Deepfake videos and AI-generated voices can have small but telling flaws. Watch for unnatural mouth movements, flickering backgrounds, or eye contact that feels off. AI-generated voices can also have random pauses or inconsistent background noise. If something doesn’t feel right, trust your instincts.

What to Do If You Suspect You or Someone Else Is Being Scammed:

- If you answer a suspicious phone or video call that appears to come from someone you know, hang up and call them back on the number you already have. Scammers rely on people reacting in the moment, and calling back removes the urgency.

- Reach out to another family member or friend to see if they received the same information. If they haven’t heard anything similar or seem confused, it’s likely a scam.

- If even a small part of you is skeptical, stop engaging and never provide any personal information. Consider blocking the number or address to prevent future attempts.

- Report a suspected AI impersonation scam to the FBI’s Internet Crime Complaint Center and to the Federal Trade Commission at ftc.gov/complaint.

- If you have transferred funds to someone and suspect you are a victim of an AI impersonation scam, contact your financial institution immediately. Quick action may help stop the transfer or recover your funds.